Introduction

In the age of cloud computing, managing large amounts of data efficiently and cost-effectively is a priority for organizations of all sizes. Amazon Web Services (AWS) addresses this with Amazon S3 (Simple Storage Service), a scalable and durable cloud storage service for storing and retrieving data. To further optimize data management, S3 provides a feature known as S3 Lifecycle Management, which automates the management of objects stored in S3 buckets so that users can optimize storage costs, meet compliance requirements, and simplify data management. In this article, we will look at AWS S3 Lifecycle Management: its benefits, configuration options, and real-world applications.

Understanding AWS S3 Lifecycle Management

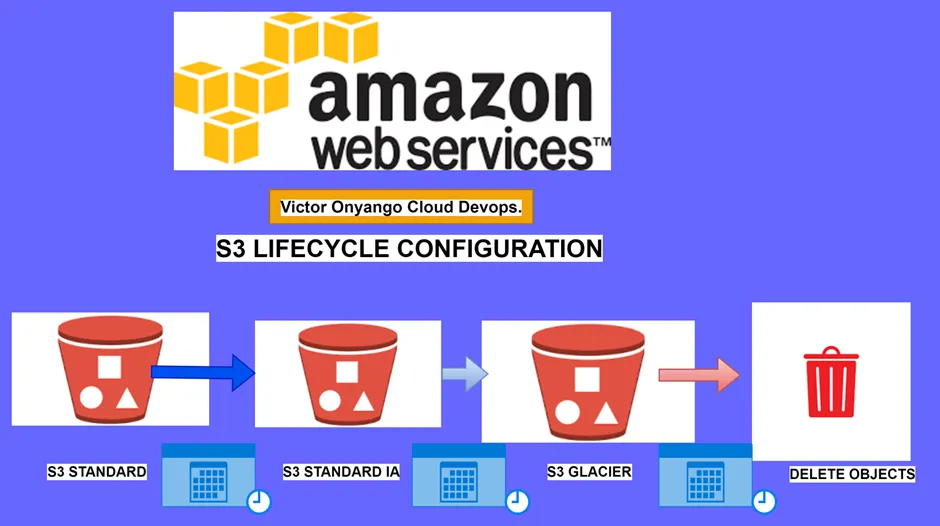

AWS S3 Lifecycle Management is a set of rules applied to the objects in a bucket to automate what happens to them over time. This automation is particularly valuable when managing large volumes of data, as it helps keep storage costs in check while the data stays in durable S3 storage, and it streamlines data management.

The key components of S3 Lifecycle Management are two types of actions:

Transition actions: These rules define when objects transition from one storage class to another. For example, you can move objects from the S3 Standard storage class to the Standard-Infrequent Access (Standard-IA) storage class a certain number of days after creation, or move them to S3 Glacier for archiving.

Expiration actions: These rules define when objects are deleted from S3. You can set expiration as a number of days after the object’s creation or as a specific date.
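To make the two action types concrete, here is a minimal sketch of a lifecycle configuration written as a Python dictionary, in the shape the S3 API expects. The rule ID and the 30-day and 365-day values are illustrative assumptions, not values from this walkthrough.

```python
import json

# Hypothetical rule: the ID and the day counts are illustrative assumptions.
lifecycle_configuration = {
    "Rules": [
        {
            "ID": "example-transition-and-expiration",
            "Status": "Enabled",
            # An empty prefix matches every object key in the bucket.
            "Filter": {"Prefix": ""},
            # Transition action: move objects to Standard-IA 30 days after creation.
            "Transitions": [{"Days": 30, "StorageClass": "STANDARD_IA"}],
            # Expiration action: delete objects 365 days after creation.
            "Expiration": {"Days": 365},
        }
    ]
}


def summarize_rule(rule):
    """Return one human-readable line per action found in a lifecycle rule."""
    lines = []
    for transition in rule.get("Transitions", []):
        lines.append(f"after {transition['Days']} days -> {transition['StorageClass']}")
    expiration = rule.get("Expiration")
    if expiration and "Days" in expiration:
        lines.append(f"after {expiration['Days']} days -> delete")
    return lines


if __name__ == "__main__":
    print(json.dumps(lifecycle_configuration, indent=2))
    for line in summarize_rule(lifecycle_configuration["Rules"][0]):
        print(line)
```

The dictionary only describes the rule; nothing is sent to AWS until it is passed to an S3 client.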

Benefits of AWS S3 Lifecycle Management

Cost Optimization.

Data Durability.

Compliance and Data Retention.

Simplified Data Management.

Real-World Applications

Data Backup and Archiving: You can set up rules to transition objects to Glacier after 90 days, keeping the data durably stored at a lower cost.

Log Data Management: Organizations can automatically move logs from the standard storage class to Glacier after a certain period, saving on storage costs while maintaining compliance with data retention policies.

Content Delivery: In scenarios where you store content for your website or application in S3, you can use S3 Lifecycle Management to move old or infrequently accessed content to a more cost-effective storage class.
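As a rough illustration of the backup and log scenarios above, the sketch below builds a rule that archives objects under a key prefix to Glacier. The function name, the `logs/` prefix, and the optional deletion period are hypothetical choices made for this example; the 90-day default mirrors the archiving example above.

```python
def build_log_archive_rule(prefix="logs/", archive_after_days=90, delete_after_days=None):
    """Build a lifecycle rule dict that archives objects under `prefix` to Glacier.

    `prefix` and `delete_after_days` are illustrative assumptions.
    """
    if archive_after_days < 0:
        raise ValueError("archive_after_days must not be negative")

    rule = {
        "ID": f"archive-{prefix.strip('/') or 'all'}",
        "Status": "Enabled",
        # Only objects whose key starts with this prefix are affected.
        "Filter": {"Prefix": prefix},
        "Transitions": [{"Days": archive_after_days, "StorageClass": "GLACIER"}],
    }

    if delete_after_days is not None:
        # Within one rule, expiration has to come later than the transition.
        if delete_after_days <= archive_after_days:
            raise ValueError("expiration must come after the Glacier transition")
        rule["Expiration"] = {"Days": delete_after_days}

    return rule


if __name__ == "__main__":
    print(build_log_archive_rule())
    print(build_log_archive_rule(prefix="backups/", delete_after_days=730))
```

Scoping the rule with a prefix keeps the archiving policy away from objects that still need fast access, such as frequently requested website content.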

Creating a Lifecycle Rule

Sign in to your AWS Management Console.

Type S3 in the search box, then select S3 under services.

In the Buckets list, choose the name of the bucket for which you want to create a lifecycle rule.

The bucket in this example is empty. You can create the lifecycle rule first, before uploading any objects to the bucket.

Choose the Management tab, then choose Create lifecycle rule.

Under Lifecycle rule name, enter a name for your rule. I will call it demolifecyclerule. Remember that the name must be unique within the bucket.

Choose the scope of the lifecycle rule. I will apply the rule to all objects in the bucket, so select that radio button, then scroll down.

Under Lifecycle rule actions, choose the actions that you want your lifecycle rule to perform. Different options appear depending on the actions that you choose.

I will start by transitioning current versions of objects between storage classes.

Then, under Choose storage class transitions, choose Standard-IA. For Days after object creation, a minimum of 30 days is required, so enter 30.

Then click Add transition to add a second transition.

For the second transition, I will move objects to Intelligent-Tiering. Here the console required a minimum of 90 days, so I will enter 90.

Next, I will choose Transition noncurrent versions of objects between storage classes. Move to that section, then choose the storage class to transition to.

Then, to permanently delete previous versions of objects, under Permanently delete noncurrent versions of objects, in Days after objects become noncurrent, enter the number of days. You can optionally specify the number of newer versions to retain.

Review the rule, then click Create rule.
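The console steps above can also be sketched as a lifecycle configuration in code. This is only an approximation of the rule built in the console: the noncurrent-version storage class and day counts are assumptions, since this walkthrough does not fix them, and `apply_lifecycle` is a hypothetical helper that expects a boto3 S3 client to be passed in.

```python
def build_demo_rule(noncurrent_transition_days=30,
                    noncurrent_expiration_days=90,
                    newer_versions_to_retain=None):
    """Build a rule resembling the console walkthrough.

    The noncurrent-version values are assumptions chosen for illustration.
    """
    rule = {
        "ID": "demolifecyclerule",
        "Status": "Enabled",
        # An empty prefix applies the rule to all objects in the bucket.
        "Filter": {"Prefix": ""},
        # Current versions: Standard-IA after 30 days, Intelligent-Tiering after 90.
        "Transitions": [
            {"Days": 30, "StorageClass": "STANDARD_IA"},
            {"Days": 90, "StorageClass": "INTELLIGENT_TIERING"},
        ],
        # Noncurrent versions: assumed to move to Standard-IA.
        "NoncurrentVersionTransitions": [
            {"NoncurrentDays": noncurrent_transition_days, "StorageClass": "STANDARD_IA"}
        ],
        # Permanently delete noncurrent versions after the given number of days.
        "NoncurrentVersionExpiration": {"NoncurrentDays": noncurrent_expiration_days},
    }
    if newer_versions_to_retain is not None:
        rule["NoncurrentVersionExpiration"]["NewerNoncurrentVersions"] = newer_versions_to_retain
    return rule


def apply_lifecycle(s3_client, bucket_name, rules):
    """Replace the bucket's lifecycle configuration with the given rules."""
    return s3_client.put_bucket_lifecycle_configuration(
        Bucket=bucket_name,
        LifecycleConfiguration={"Rules": rules},
    )


# Example usage, assuming boto3 is installed and credentials are configured;
# "my-example-bucket" is a placeholder name:
#   import boto3
#   apply_lifecycle(boto3.client("s3"), "my-example-bucket", [build_demo_rule()])
```

Note that this call replaces the whole lifecycle configuration of the bucket, so any existing rules you want to keep need to be included in the list.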

Success.

Clean up.
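If cleaning up here means removing the lifecycle rule again, one possible way to do it in code is sketched below. `remove_lifecycle` is a hypothetical helper, and it assumes a boto3 S3 client is passed in.

```python
def remove_lifecycle(s3_client, bucket_name):
    """Delete the bucket's lifecycle configuration.

    This removes all lifecycle rules on the bucket; the stored objects
    themselves are left in place.
    """
    return s3_client.delete_bucket_lifecycle(Bucket=bucket_name)


# Example usage, assuming boto3 is installed and credentials are configured;
# "my-example-bucket" is a placeholder name:
#   import boto3
#   remove_lifecycle(boto3.client("s3"), "my-example-bucket")
```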

Stay tuned for more.

A skilled Cloud DevOps Engineer and Solutions Architect specializing in infrastructure provisioning and automation, with a focus on building scalable, fault-tolerant, and secure cloud environments.